Docker logging using filebeat

I’ve been looking for a good way to view my Docker container logs in Kibana and Elasticsearch while still being able to access them through the Docker Community Edition engine itself. Sadly, the engine lacks an option to use multiple logging outputs for a specific container.

Before I settled on filebeat as a nice solution to this problem, I was running fluentd in a Docker container on the same host. This forced me to switch the Docker logging driver to fluentd, after which I could no longer access the logs with the `docker logs` command. To work around this in practice, I would end up disabling fluentd logging for that container and restarting it. That made me look for a better solution.

So, how do you set up filebeat to ingest logs from Docker containers? I presume you already have Elasticsearch and Kibana running somewhere. If not, I’ve got an easy-to-run docker-compose.yml example that runs a 3-node Elasticsearch cluster with Kibana, so you can easily experiment with both locally.
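
If you only need something to point filebeat at, a single node is enough. The sketch below is an assumption of mine, not the 3-node example mentioned above: it uses the 7.9.1 images to match the filebeat version used later, names the node `elasticsearch1` so it matches one of the hosts in the filebeat service, and runs Elasticsearch without security, which is only acceptable for local experiments.

```yaml
# Hypothetical minimal local stack: one Elasticsearch node plus Kibana.
# Security is not configured; use only for local experiments.
services:
  elasticsearch1:
    image: docker.elastic.co/elasticsearch/elasticsearch:7.9.1
    container_name: elasticsearch1
    environment:
      # Form a one-node cluster instead of looking for peers
      - discovery.type=single-node
      # Keep the JVM heap small for a laptop
      - ES_JAVA_OPTS=-Xms512m -Xmx512m
    ports:
      - "9200:9200"
    volumes:
      - esdata1:/usr/share/elasticsearch/data

  kibana:
    image: docker.elastic.co/kibana/kibana:7.9.1
    container_name: kibana
    environment:
      - ELASTICSEARCH_HOSTS=http://elasticsearch1:9200
    ports:
      - "5601:5601"
    depends_on:
      - elasticsearch1

volumes:
  esdata1:
```

With only one node, the filebeat service's `ELASTICSEARCH_HOSTS` would need to list just `elasticsearch1:9200`, and filebeat has to share a Docker network with this stack (for example by living in the same compose file).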

As for filebeat itself, I run it in a container and give it access to the Docker socket. That is probably not the best choice for a secure production environment, but it is very easy and effective. This is the docker-compose file with the filebeat service added to it:

```yaml
services:
  filebeat:
    image: myregistry.azurecr.io/filebeat:latest
    container_name: filebeat
    hostname: mydockerhost
    restart: unless-stopped
    environment:
      - ELASTICSEARCH_HOSTS=elasticsearch1:9200,elasticsearch2:9200
    labels:
      co.elastic.logs/enabled: "false"
    volumes:
      - type: bind
        source: /var/run/docker.sock
        target: /var/run/docker.sock
      - type: bind
        source: /var/lib/docker
        target: /var/lib/docker
    logging:
      driver: "json-file"
      options:
        max-size: "10m"
        max-file: "2"
```

The `co.elastic.logs/enabled: "false"` label on the filebeat container above keeps filebeat from ingesting its own container log files.

I also set the `hostname` directive for the filebeat service, so that logs end up in Elasticsearch with a reference to the actual Docker host they were produced on.
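
Because mounting the Docker socket and all of `/var/lib/docker` gives the container a lot of power, a somewhat narrower `volumes` section is possible. The sketch below assumes the default Docker data root (`/var/lib/docker`) and the default json-file log driver, and mounts only the per-container log directory plus the socket, both read-only.

```yaml
services:
  filebeat:
    # ...other settings as in the compose file above...
    volumes:
      # Only the per-container log files, not all of /var/lib/docker
      - type: bind
        source: /var/lib/docker/containers
        target: /var/lib/docker/containers
        read_only: true
      # Used by the docker autodiscover provider for container metadata.
      # Caveat: read_only on a socket does not limit what the Docker API allows.
      - type: bind
        source: /var/run/docker.sock
        target: /var/run/docker.sock
        read_only: true
```

As the comment notes, anything that can talk to the socket still has full control over the Docker engine, so this reduces filesystem exposure rather than making the setup production-grade.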

And this is the Dockerfile for building the customized filebeat image referenced above. It is based on the official filebeat Docker image provided by Elastic.co:

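```dockerfile
# Start from the official filebeat image
FROM docker.elastic.co/beats/filebeat:7.9.1

# Replace the default configuration with our own
COPY filebeat.docker.yml /usr/share/filebeat/filebeat.yml

# Run as root (there is no switch back to the filebeat user); reading the
# Docker socket and the container log files typically requires it
USER root

# Filebeat refuses to load a config file that is not owned by root or the
# running user, or that is writable by anyone other than its owner
RUN chown root:filebeat /usr/share/filebeat/filebeat.yml
RUN chmod go-w /usr/share/filebeat/filebeat.yml
```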
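
The compose file above pulls this image from a private registry (`myregistry.azurecr.io`). If you would rather skip the registry, Compose can build the image from the Dockerfile directly. A sketch, assuming the Dockerfile and filebeat.docker.yml sit in a `filebeat/` directory next to docker-compose.yml and using a hypothetical local image name:

```yaml
services:
  filebeat:
    # Build from ./filebeat/Dockerfile instead of pulling from a registry
    build:
      context: ./filebeat
    # Tag for the locally built image (hypothetical name)
    image: filebeat-custom:7.9.1
    container_name: filebeat
    # ...remaining settings unchanged from the compose file above...
```

After editing filebeat.docker.yml, `docker-compose up -d --build` rebuilds the image and recreates the container.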

And this is my filebeat.docker.yml that is copied into the container:

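```yaml
filebeat.config:
  modules:
    path: ${path.config}/modules.d/*.yml
    reload.enabled: false

# Discover containers via the Docker API and honor co.elastic.logs/* labels
filebeat.autodiscover:
  providers:
    - type: docker
      hints.enabled: true

processors:
  - add_cloud_metadata: ~

# Override via the ELASTICSEARCH_HOSTS environment variable; defaults to elasticsearch:9200
output.elasticsearch:
  hosts: '${ELASTICSEARCH_HOSTS:elasticsearch:9200}'

# Let filebeat manage the filebeat-* indices through an ILM policy
setup.ilm.enabled: auto
setup.ilm.rollover_alias: "filebeat"
setup.ilm.pattern: "{now/d}-000001"
```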

This configuration is somewhat tailored to my specific needs, but in essence it just replaces the default filebeat configuration with one that uses Docker autodiscover (with hints enabled) and sends logs directly to the Elasticsearch cluster. The `${ELASTICSEARCH_HOSTS:elasticsearch:9200}` reference lets me override the Elasticsearch location(s) through the `ELASTICSEARCH_HOSTS` environment variable; when that variable is not set, filebeat falls back to `elasticsearch:9200`. In addition, I let filebeat manage the `filebeat-*` indices via an Index Lifecycle Management (ILM) policy, which has been working well for me.
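
With `hints.enabled: true`, filebeat collects logs from every container on the host unless a container opts out with `co.elastic.logs/enabled: "false"`, as the filebeat container does. If you prefer opt-in instead, the autodiscover documentation describes disabling the default config so that only containers labeled `co.elastic.logs/enabled: "true"` are collected. A sketch of that variant of the autodiscover section; check that `hints.default_config.enabled` is supported by the filebeat version you run:

```yaml
filebeat.autodiscover:
  providers:
    - type: docker
      hints.enabled: true
      # Collect nothing by default; only containers labeled
      # co.elastic.logs/enabled: "true" are picked up
      hints.default_config.enabled: false
```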

All of the code above is also available as a just-run-and-it-works™ example on GitHub.

One thing that springs to mind is that messages from some processes in some containers could be processed further. Filebeat can help with this in all kinds of ways, as described in its autodiscover documentation.
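
As one example of such further processing, hint labels on an application container can tell filebeat to stitch multi-line messages such as stack traces back into a single event. A sketch for a hypothetical service `myapp` whose log lines start with `[`; the service name, image and pattern are assumptions, while the `co.elastic.logs/multiline.*` labels are filebeat hints:

```yaml
services:
  myapp:
    image: myapp:latest   # hypothetical application image
    labels:
      # Lines that do NOT start with "[" are appended to the preceding
      # line that does, so a stack trace becomes one log event
      co.elastic.logs/multiline.pattern: '^\['
      co.elastic.logs/multiline.negate: "true"
      co.elastic.logs/multiline.match: after
```

Because the labels live on the application container, no change to filebeat.docker.yml is needed; filebeat picks them up when it discovers the container.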

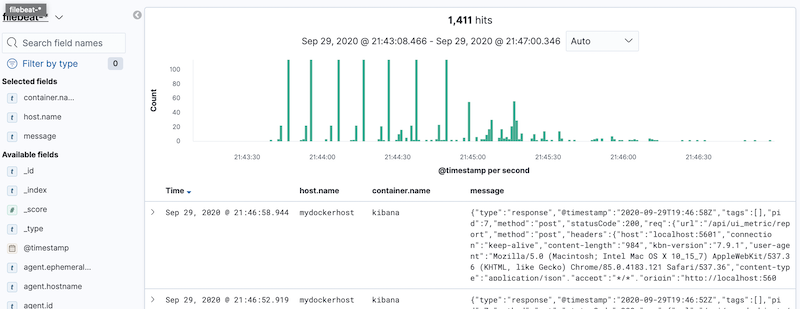

Now, with Elasticsearch, Kibana and filebeat in place and filebeat ingesting the logs of the Docker containers on its host, I can not only easily view the unprocessed (raw) container log output in Kibana at http://localhost:5601 (after creating an index pattern for `filebeat-*`), but also still read the container logs through Docker's default log-to-file mechanism (e.g. `docker logs -f <container_name>`). Hooray!